Linux perf is a fantastically useful tool for all sorts of profiling tasks. It’s a statistical profiler that works by capturing the program counter value when a particular performance event occurs. This event is typically generated by a timer (e.g. 1kHz) but can be any event supported by your processor’s PMU (e.g. cache miss, branch mispredict, etc.). Try perf list to see the events available on your system.

A table of raw program counter addresses isn’t particularly useful to humans so perf needs to associate each address with the symbol (function) that contains it. For ahead-of-time compiled programs and shared libraries perf can look this up in the ELF symbol table on disk, but for JIT-compiled languages like Java this information isn’t available as the code is generated on-the-fly in memory.

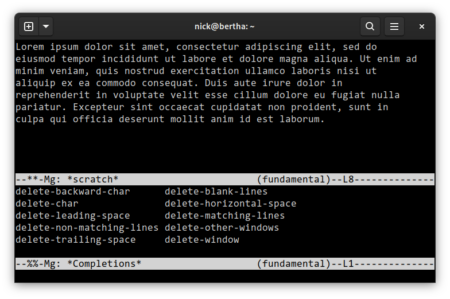

Let’s look at what perf top reports while running a CPU-intensive Java program:

Samples: 136K of event 'cycles', 4000 Hz, Event count (approx.): 57070116973 lost: 0/0 drop: 0/0

Overhead Shared Object Symbol

16.33% [JIT] tid 41266 [.] 0x00007fd733e40ec0

16.15% [JIT] tid 41266 [.] 0x00007fd733e40e3b

16.14% [JIT] tid 41266 [.] 0x00007fd733e40df1

16.14% [JIT] tid 41266 [.] 0x00007fd733e40e81

2.80% [JIT] tid 41266 [.] 0x00007fd733e40df5

2.62% [JIT] tid 41266 [.] 0x00007fd733e40e41

2.45% [JIT] tid 41266 [.] 0x00007fd733e40ec4

2.43% [JIT] tid 41266 [.] 0x00007fd733e40e87

Perf marks these locations as [JIT] because the addresses are in part of the process’s address map not backed by a file. Because the addresses are all very similar we might guess they’re in the same method, but perf has no way to group them and shows each unique address separately. Apart from that it’s not very helpful for figuring out which method is consuming all the cycles.

As an aside, it’s worth briefly comparing perf’s approach, which samples the exact hardware instruction being executed when a PMU event occurs, with a traditional Java profiler like VisualVM which samples at the JVM level (i.e. bytecodes). A JVM profiler needs to interrupt the thread, then record the current method, bytecode index, and stack trace, and finally resume the thread. Obviously this has larger overhead but there is a deeper problem: JIT-ed code cannot be interrupted at arbitrary points because the runtime may not be able to accurately reconstruct the VM state at that point. For example one cannot inspect the VM state halfway through executing a bytecode. So at the very least the JIT-ed code needs to continue executing to the end of the current bytecode. But requiring the VM to be in a consistent state at the end of each bytecode places too many restrictions on an optimising JIT. Therefore the optimised code can typically only be interrupted at special “safepoints” inserted by the JIT – in Hotspot this is at method return and loop back-edges. That means that a JVM profiler can only see the thread stopped at one of these safepoints which may deviate from the actual hot parts of the code, sometimes wildly. This problem is known as safepoint bias.

So a hardware profiler can give us better accuracy, but how to translate the JIT-ed code addresses to Java method names? Currently there are at least two tools to do this, both of which are implemented as JVMTI plugins that load into a JVM process and then dump some metadata for perf to use.

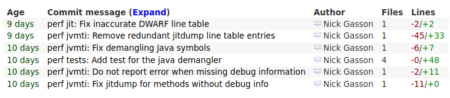

The first is the “jitdump” plugin that is part of the Linux perf tree. After being loaded into a JVM, the plugin writes out all the machine code and metadata for each method that is JIT compiled. Later this file can be combined with recorded profile data using perf inject --jit to produce an annotated data file with the correct symbol names, as well as a separate ELF shared object for each method allowing perf to display the assembly code. I use this often at work when I need to do some detailed JIT profiling, but the offline annotation step is cumbersome and the data files can be 100s of MB for large programs. The plugin itself is complex and historically buggy. I’ve fixed several of those issues myself but wouldn’t be surprised if there are more lurking. This tool is mostly overkill for typical Java developers.

The second tool that I’m aware of is perf-map-agent. This generates a perf “map” file which is a simple text file listing start address, length, and symbol name for each JIT compiled method. Perf will load this file automatically if it finds one in /tmp. As the map file doesn’t contain the actual instructions it’s much smaller than the jitdump file, and doesn’t require an extra step to annotate the profile data so it can be used for live profiling (i.e. perf top). The downsides are that profile data is aggregated by method so you can’t drill down to individual machine instructions, and the map can become stale with a long-running VM as methods can be unloaded or recompiled. You also need to compile the plugin yourself as it’s not packaged in any distro and many people would rightly be wary of loading untrusted third-party code which has full access to the VM. So it would be much more convenient if the VM could just write this map file itself.

OpenJDK 17, which should be released early next month, has a minor new feature contributed by yours truly to do just this: either send the diagnostic command Compiler.perfmap to a running VM with jcmd, or run java with -XX:+DumpPerfMapAtExit, and the VM will write a perf map file that can be used to symbolise JIT-ed code.

$ jps

40885 Jps

40846 PiCalculator

$ jcmd 40846 Compiler.perfmap

40846:

Command executed successfully

$ head /tmp/perf-40846.map

0x00007ff6dbe401a0 0x0000000000000238 void java.util.Arrays.fill(int[], int)

0x00007ff6dbe406a0 0x0000000000000338 void PiSpigout.calc()

0x00007ff6dbe40cc0 0x0000000000000468 void PiSpigout.calc()

0x00007ff6dbe41520 0x0000000000000138 int PiSpigout.invalidDigitsControl(int, int)

0x00007ff6d49091a0 0x0000000000000110 void java.lang.Object.<init>()

0x00007ff6d4909560 0x0000000000000350 int java.lang.String.hashCode()

0x00007ff6d4909b20 0x0000000000000130 byte java.lang.String.coder()

0x00007ff6d4909ea0 0x0000000000000170 boolean java.lang.String.isLatin1()

Here we can see for example that the String.hashCode() method starts at address 0x00007ff6d4909560 and is 0x350 bytes long. Let’s run perf top again:

Samples: 206K of event 'cycles', 4000 Hz, Event count (approx.): 94449755711 lost: 0/0 drop: 0/0

Overhead Shared Object Symbol

78.78% [JIT] tid 40846 [.] void PiSpigout.calc()

0.56% libicuuc.so.67.1 [.] icu_67::RuleBasedBreakIterator::handleNext

0.34% ld-2.31.so [.] do_lookup_x

0.30% libglib-2.0.so.0.6600.3 [.] g_source_ref

0.24% libglib-2.0.so.0.6600.3 [.] g_hash_table_lookup

0.24% libc-2.31.so [.] __memmove_avx_unaligned_erms

0.20% libicuuc.so.67.1 [.] umtx_lock_67

This is much better: now we can see that 79% of system-wide cycles are spent in one Java method PiSpigout.calc().

Alternatively we can do offline profiling with perf record:

$ perf record java -XX:+UnlockDiagnosticVMOptions -XX:+DumpPerfMapAtExit PiCalculator 50

3.1415926535897932384626433832795028841971693993750

[ perf record: Woken up 1 times to write data ]

[ perf record: Captured and wrote 0.030 MB perf.data (230 samples) ]

$ perf report

Samples: 340 of event 'cycles', Event count (approx.): 71930116

Overhead Command Shared Object Symbol

11.83% java [JIT] tid 44300 [.] Interpreter

4.19% java [JIT] tid 44300 [.] flush_icache_stub

3.87% java [kernel.kallsyms] [k] mem_cgroup_from_task

...

Here the program didn’t run long enough for any method to be JIT compiled and we see most of the cycles are spent in the interpreter. (By the way, the interpreter still looks like JIT-ed code to perf because its machine code is generated dynamically by Hotspot at startup.)

I’d be interested to hear from anyone who tries this new feature and finds it useful, or otherwise!

Posts

Posts